C51

Overview

C51 was first proposed in A Distributional Perspective on Reinforcement Learning. Unlike previous work, C51 evaluates the complete distribution of a Q-value rather than only its expectation. The authors designed a distributional Bellman operator that preserves multimodality in value distributions; this is believed to make learning more stable and to mitigate the negative effects of learning from a non-stationary policy.

Quick Facts

C51 is a model-free and value-based RL algorithm.

C51 only supports discrete action spaces.

C51 is an off-policy algorithm.

Usually, C51 uses eps-greedy or multinomial sampling for exploration.

C51 can be equipped with RNN.
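As a rough illustration of the eps-greedy exploration mentioned above, the sketch below picks a random action with probability eps and otherwise the action with the largest expected Q-value. The helper name `eps_greedy_action` and the sample Q-values are hypothetical and are not part of the DI-engine API.

```python
import random

def eps_greedy_action(q_values, eps, rng=random):
    """Pick a random action with probability eps, else the greedy one."""
    if rng.random() < eps:
        return rng.randrange(len(q_values))
    # Greedy branch: index of the largest expected Q-value.
    return max(range(len(q_values)), key=lambda a: q_values[a])

q_values = [0.1, 1.5, -0.3]  # illustrative expected Q-values for 3 actions
greedy = eps_greedy_action(q_values, eps=0.0)
explore = eps_greedy_action(q_values, eps=1.0, rng=random.Random(0))
```

With eps=0.0 the choice is always greedy, and with eps=1.0 it is always uniformly random; in practice eps is decayed between these extremes during training.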

Pseudo-code

Note

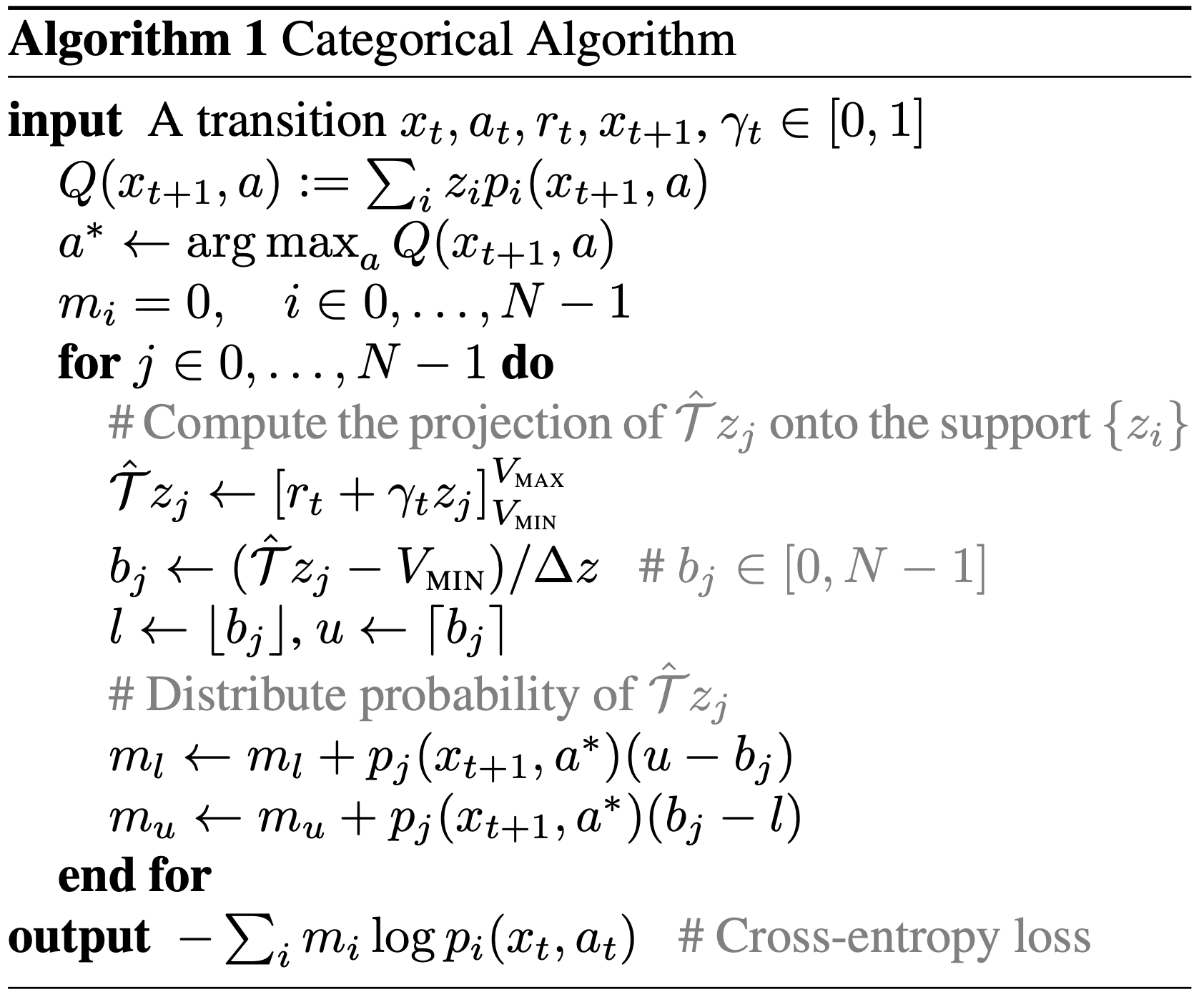

C51 models the value distribution using a discrete distribution whose support is a set of N atoms: \(z_i = V_{\min} + i \cdot \Delta z,\ i = 0, 1, \dots, N-1\), with \(\Delta z = (V_{\max} - V_{\min}) / (N - 1)\), so that the last atom lies exactly at \(V_{\max}\). Each atom \(z_i\) has a parameterized probability \(p_i\). The Bellman update of C51 projects the distribution of \(r + \gamma z_j^{(t+1)}\) onto the support \(z_i^{(t)}\).
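The support set can be sketched in a few lines of plain Python. This is a minimal illustration, assuming the spacing used in the original paper, \(\Delta z = (V_{\max} - V_{\min}) / (N - 1)\), so that the first and last atoms land on \(V_{\min}\) and \(V_{\max}\). The function name `make_support` is hypothetical.

```python
def make_support(v_min=-10.0, v_max=10.0, n_atom=51):
    """Return the n_atom evenly spaced atoms on [v_min, v_max] and their spacing."""
    # N atoms span N - 1 intervals, hence the division by (n_atom - 1).
    delta = (v_max - v_min) / (n_atom - 1)
    support = [v_min + i * delta for i in range(n_atom)]
    return support, delta

support, delta = make_support()
```

With the defaults (-10, 10, 51) the spacing is 0.4 and the middle atom sits at 0.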

Key Equations or Key Graphs

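The Bellman target of C51 is derived by projecting the distribution of \(r + \gamma z_j\) onto the current support \(z_i\). Given a sample transition \((x, a, r, x')\), we compute the Bellman update \(\hat{\mathcal{T}} z_j := r + \gamma z_j\) for each atom \(z_j\), then distribute its probability \(p_j(x', \pi(x'))\) to the immediate neighbors \(z_i\) of \(\hat{\mathcal{T}} z_j\). The \(i\)-th component of the projected update is:

\[
\left(\Phi \hat{\mathcal{T}} Z_\theta(x, a)\right)_i = \sum_{j=0}^{N-1} \left[ 1 - \frac{\left| \left[\hat{\mathcal{T}} z_j\right]_{V_{\min}}^{V_{\max}} - z_i \right|}{\Delta z} \right]_0^1 p_j(x', \pi(x'))
\]

where \([\cdot]_a^b\) clips its argument to \([a, b]\) and \(\Delta z\) is the spacing between adjacent atoms.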
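The projection step can be sketched in plain Python as follows. This is a minimal, unbatched illustration under stated assumptions: atoms are evenly spaced with \(\Delta z = (V_{\max} - V_{\min}) / (N - 1)\), the transition is non-terminal, and `project_distribution` is a hypothetical helper name rather than the DI-engine implementation.

```python
import math

def project_distribution(next_probs, reward, gamma, v_min=-10.0, v_max=10.0):
    """Project the distribution of r + gamma * z_j back onto the fixed atoms.

    next_probs[j] plays the role of p_j(x', pi(x')); the returned list is the
    target probability assigned to each atom z_i.
    """
    n_atom = len(next_probs)
    delta = (v_max - v_min) / (n_atom - 1)
    target = [0.0] * n_atom
    for j, p_j in enumerate(next_probs):
        z_j = v_min + j * delta
        # Bellman update of atom j, clipped to the support range.
        tz_j = min(max(reward + gamma * z_j, v_min), v_max)
        # Fractional index of tz_j on the support, guarded against rounding.
        b = min(max((tz_j - v_min) / delta, 0.0), float(n_atom - 1))
        lower, upper = math.floor(b), math.ceil(b)
        if lower == upper:
            # tz_j falls exactly on an atom, which receives all of p_j.
            target[lower] += p_j
        else:
            # Split p_j between the two neighbours, weighted by proximity.
            target[lower] += p_j * (upper - b)
            target[upper] += p_j * (b - lower)
    return target

uniform = [1.0 / 51] * 51
target = project_distribution(uniform, reward=1.0, gamma=0.99)
```

The projected target remains a valid probability distribution, which is what allows a cross-entropy (KL) loss against the predicted distribution for the taken action.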

Extensions

C51 can be combined with:

- PER (Prioritized Experience Replay)
- Multi-step TD-loss
- Double (target) network
- Dueling head
- RNN
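For the multi-step TD-loss extension, a minimal sketch of how the per-atom Bellman update might change is shown below, assuming the usual n-step form in which each atom is shifted by the discounted sum of n rewards and scaled by \(\gamma^n\). The function names are hypothetical, and the resulting values would still need to be clipped to \([V_{\min}, V_{\max}]\) and projected onto the support.

```python
def nstep_return(rewards, gamma):
    """Discounted sum of the first n rewards: sum_k gamma**k * r_{t+k}."""
    return sum((gamma ** k) * r for k, r in enumerate(rewards))

def nstep_atom_targets(rewards, gamma, support):
    """Shift every atom by the n-step return and discount it by gamma**n."""
    n = len(rewards)
    r_n = nstep_return(rewards, gamma)
    return [r_n + (gamma ** n) * z for z in support]

# Illustrative values: 3 rewards, gamma = 0.5, and a tiny 3-atom support.
targets = nstep_atom_targets([1.0, 0.0, 2.0], gamma=0.5, support=[-1.0, 0.0, 1.0])
```

With a single reward (n = 1) this reduces to the one-step update \(r + \gamma z_j\).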

Implementation

Tip

Our benchmark results for C51 use the same hyper-parameters as DQN, except for n_atom, which is exclusive to C51 and is empirically set to 51.

The default config of C51 is defined as follows:

- class ding.policy.c51.C51Policy(cfg: easydict.EasyDict, model: Optional[torch.nn.modules.module.Module] = None, enable_field: Optional[List[str]] = None)

- Overview:

Policy class of C51 algorithm.

- Config:

| ID | Symbol | Type | Default Value | Description | Other(Shape) |
|---|---|---|---|---|---|
| 1 | type | str | c51 | RL policy register name, refer to registry POLICY_REGISTRY | this arg is optional, a placeholder |
| 2 | cuda | bool | False | Whether to use cuda for network | this arg can be different from modes |
| 3 | on_policy | bool | False | Whether the RL algorithm is on-policy or off-policy | |
| 4 | priority | bool | False | Whether to use priority (PER) | priority sample, update priority |
| 5 | model.v_min | float | -10 | Value of the smallest atom in the support set. | |
| 6 | model.v_max | float | 10 | Value of the largest atom in the support set. | |
| 7 | model.n_atom | int | 51 | Number of atoms in the support set of the value distribution. | |
| 8 | other.eps.start | float | 0.95 | Start value for epsilon decay. | |
| 9 | other.eps.end | float | 0.1 | End value for epsilon decay. | |
| 10 | discount_factor | float | 0.97, [0.95, 0.999] | Reward's future discount factor, aka. gamma | may be 1 when sparse reward env |
| 11 | nstep | int | 1 | N-step reward discount sum for target q_value estimation | |
| 12 | learn.update_per_collect | int | 3 | How many updates (iterations) to train after collector's one collection. Only valid in serial training | this arg can vary across envs. Bigger val means more off-policy |
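To make the dotted symbols in the config table concrete, the sketch below writes the listed defaults as a nested Python dict and overrides the support range for a hypothetical environment with larger returns. The nesting is inferred from the dotted names, the key spelling `update_per_collect` for entry 12 is an assumption, and the override values are purely illustrative.

```python
from copy import deepcopy

# Defaults as listed in the config table; the nested layout is an assumption.
c51_default_config = dict(
    type='c51',
    cuda=False,
    on_policy=False,
    priority=False,
    discount_factor=0.97,
    nstep=1,
    model=dict(v_min=-10, v_max=10, n_atom=51),
    learn=dict(update_per_collect=3),  # assumed key spelling for entry 12
    other=dict(eps=dict(start=0.95, end=0.1)),
)

# Hypothetical override: widen the support for an env with larger returns.
custom_config = deepcopy(c51_default_config)
custom_config['model'].update(v_min=-50, v_max=50)
```

Copying before overriding keeps the defaults intact, so several experiments can start from the same base config.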

The network interface C51 used is defined as follows:

- class ding.model.template.q_learning.C51DQN(obs_shape: Union[int, ding.utils.type_helper.SequenceType], action_shape: Union[int, ding.utils.type_helper.SequenceType], encoder_hidden_size_list: ding.utils.type_helper.SequenceType = [128, 128, 64], head_hidden_size: Optional[int] = None, head_layer_num: int = 1, activation: Optional[torch.nn.modules.module.Module] = ReLU(), norm_type: Optional[str] = None, v_min: Optional[float] = -10, v_max: Optional[float] = 10, n_atom: Optional[int] = 51)

- Overview:

The neural network structure and computation graph of C51DQN, which combines distributional RL and DQN. You can refer to https://arxiv.org/pdf/1707.06887.pdf for more details. The C51DQN is composed of `encoder` and `head`. `encoder` is used to extract the feature of observation, and `head` is used to compute the distribution of Q-value.

- Interfaces:

`__init__`, `forward`

Note

Current C51DQN supports two types of encoder: `FCEncoder` and `ConvEncoder`.

- forward(x: torch.Tensor) -> Dict

- Overview:

C51DQN forward computation graph, input observation tensor to predict q_value and its distribution.

- Arguments:

x (torch.Tensor): The input observation tensor data.

- Returns:

outputs (Dict): The output of C51DQN's forward, including q_value and distribution.

- ReturnsKeys:

logit (torch.Tensor): Discrete Q-value output of each possible action dimension.

distribution (torch.Tensor): Discretized Q-value distribution, i.e., the probability of each uniformly spaced atom, such as 51 atoms evenly spaced over [-10, 10].

- Shapes:

x (torch.Tensor): \((B, N)\), where B is batch size and N is obs_shape.

logit (torch.Tensor): \((B, M)\), where M is action_shape.

distribution (torch.Tensor): \((B, M, P)\), where P is n_atom.

- Examples:

```python
>>> import torch
>>> from ding.model.template.q_learning import C51DQN
>>> model = C51DQN(128, 64)  # arguments: 'obs_shape' and 'action_shape'
>>> inputs = torch.randn(4, 128)
>>> outputs = model(inputs)
>>> assert isinstance(outputs, dict)
>>> # logit has shape (B, action_shape); here action_shape = 64
>>> assert outputs['logit'].shape == torch.Size([4, 64])
>>> # default n_atom: int = 51
>>> assert outputs['distribution'].shape == torch.Size([4, 64, 51])
```
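The relationship between the two returned keys can be illustrated with a small sketch, assuming that the per-action Q-value is the expectation of the atom values under the predicted distribution, as in the original paper's action selection. Whether C51DQN computes logit in exactly this way is not stated here, and `expected_q` is a hypothetical helper operating on plain lists rather than tensors.

```python
def expected_q(distribution, v_min=-10.0, v_max=10.0):
    """Collapse per-action atom probabilities (M, P) into expected values (M,)."""
    n_atom = len(distribution[0])
    delta = (v_max - v_min) / (n_atom - 1)
    support = [v_min + i * delta for i in range(n_atom)]
    # Expectation over atoms: sum_i p_i * z_i for each action.
    return [sum(p * z for p, z in zip(probs, support)) for probs in distribution]

# Two actions over 3 atoms located at -10, 0, 10 (illustrative values).
dist = [[0.0, 1.0, 0.0],
        [0.25, 0.25, 0.5]]
q = expected_q(dist)
best_action = max(range(len(q)), key=lambda a: q[a])
```

Greedy action selection then only needs the expected values, while the full distribution is kept for the training loss.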

Note

For consistency and compatibility, we name all the outputs of the network which are related to action selections as `logit`.

Note

For convenience, we recommend that the number of atoms be odd, so that the middle atom lies exactly at the midpoint of the support, \((V_{\min} + V_{\max}) / 2\) (zero for the default range [-10, 10]).

Benchmark

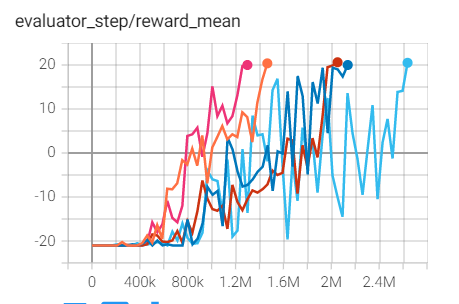

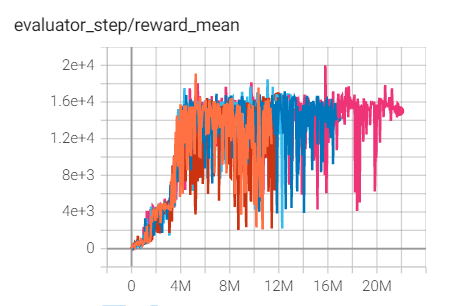

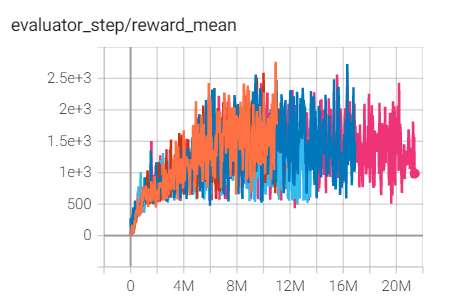

| environment | best mean reward | evaluation results | config link | comparison |
|---|---|---|---|---|
| Pong (PongNoFrameskip-v4) | 20.6 | | | Tianshou(20) |
| Qbert (QbertNoFrameskip-v4) | 20006 | | | Tianshou(16245) |
| SpaceInvaders (SpaceInvadersNoFrameskip-v4) | 2766 | | | Tianshou(988.5) |

P.S.:

The above results are obtained by running the same configuration on five different random seeds (0, 1, 2, 3, 4).

For discrete action space algorithms like DQN, the Atari environment set is generally used for testing (including sub-environments such as Pong), and Atari environments are generally evaluated by the highest mean reward within 10M env_step of training. For more details about Atari, please refer to Atari Env Tutorial.